Three mystery whales have each spent more than $10 billion on Nvidia’s AI chips this year

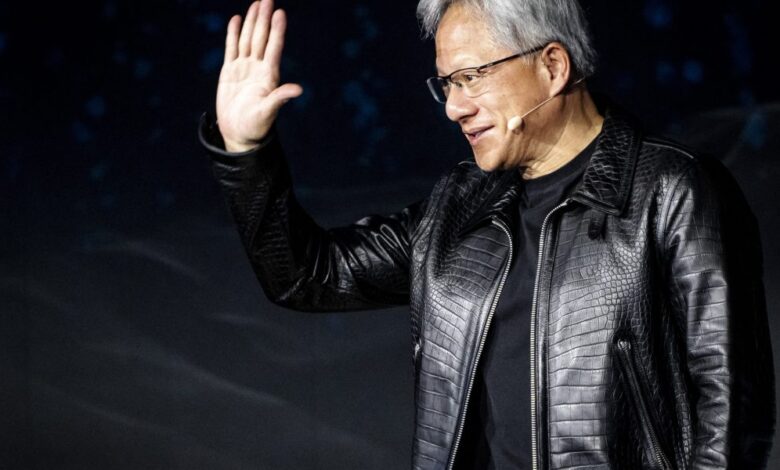

AI chip supplier Nvidia, the world’s most valuable company by market capitalization, remains heavily dependent on a handful of anonymous customers that together contribute tens of billions of dollars in revenue.

The AI chip darling again warned investors in its quarterly 10-Q filing with the SEC that it has accounts so important that each one’s orders exceeded the threshold of 10% of Nvidia’s global consolidated revenue.

For example, an elite trio of especially deep-pocketed customers individually bought between $10 billion and $11 billion worth of goods and services over the first nine months of the fiscal year, which ended in late October.

Luckily for Nvidia investors, this won’t change anytime soon. Mandeep Singh, head of global technology research at Bloomberg Intelligence, said he believes founder and CEO Jensen Huang’s prediction that spending won’t stop.

“The data center training market could hit $1 trillion without any real pullback,” he said. By that point Nvidia’s market share will almost certainly have dropped markedly from its current 90%, but that could still mean hundreds of billions of dollars in annual revenue.

Nvidia remains supply constrained

Outside of defense contractors that live off the Pentagon, it is unusual for a company to concentrate so much risk in a small number of customers, let alone a company poised to become the first worth $4 trillion.

Looking strictly at Nvidia’s accounts on a three-month basis, there were four anonymous whales that together made up nearly every second dollar of revenue in the second fiscal quarter. This time at least one of them has dropped out, since only three still meet that criterion.

Singh told Fortune the anonymous whales may include Microsoft, Meta and possibly Super Micro. But Nvidia declined to comment on this speculation.

Nvidia refers to them only as Customers A, B and C. All told, they purchased a combined $12.6 billion in goods and services. That is more than a third of the $35.1 billion in total revenue Nvidia recorded in its third fiscal quarter to the end of October.

Their share of revenue was also split equally, with each accounting for 12%, suggesting they were receiving the maximum number of chips allocated to them rather than as many as they would ideally have wanted.
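The arithmetic behind those figures can be checked with a short sketch. It uses only the numbers quoted in this article; the even three-way split of the $12.6 billion is an assumption based on each customer being reported at 12%, and the variable and function names are illustrative rather than anything taken from Nvidia's filing.

```python
# Figures quoted in the article, in billions of US dollars.
Q3_REVENUE_BN = 35.1          # Nvidia's total fiscal third-quarter revenue
COMBINED_PURCHASES_BN = 12.6  # Customers A, B and C together
NUM_WHALES = 3


def share_of_revenue(amount_bn, total_bn):
    """Return amount as a fraction of total revenue."""
    return amount_bn / total_bn


# Combined share of the three anonymous customers.
combined_share = share_of_revenue(COMBINED_PURCHASES_BN, Q3_REVENUE_BN)

# Assumption: the combined amount is split evenly across the three.
per_customer_bn = COMBINED_PURCHASES_BN / NUM_WHALES
per_customer_share = share_of_revenue(per_customer_bn, Q3_REVENUE_BN)

print(f"Combined share of quarterly revenue: {combined_share:.1%}")
print(f"Per customer: ${per_customer_bn:.1f}bn ({per_customer_share:.0%})")
```

Under that even-split assumption, each customer comes out at roughly $4.2 billion, or about 12% of quarterly revenue, and the three together account for a little under 36%.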

This is consistent with founder and CEO Jensen Huang’s comments that his company is supply constrained. Nvidia cannot simply produce more chips, because it has outsourced fabrication of its industry-leading AI chips wholesale to Taiwan’s TSMC and has no manufacturing facilities of its own.

Intermediaries or end users?

Importantly, Nvidia’s designation of large anonymous customers as “Customer A”, “Customer B” and so on is not fixed from one fiscal period to the next. They can and do change places, and Nvidia keeps their identities secret for competitive reasons. These customers would certainly not want their investors, employees, critics, activists and competitors to see exactly how much they are spending on Nvidia chips.

For example, a party designated “Customer A” purchased approximately $4.2 billion in goods and services during the last quarterly fiscal period. Yet it appears to have accounted for less in earlier periods, since it did not exceed the 10% mark across the first nine months in total.

Meanwhile, “Customer D” appears to have done the exact opposite, reducing purchases of Nvidia chips in the last fiscal quarter but still accounting for 12% of year-to-date revenue.

Since their names are kept secret, it is difficult to say whether they are intermediaries like the troubled Super Micro Computer, which provides hardware to data centers, or end users like Elon Musk’s xAI. The latter, for example, came out of nowhere to build its new Memphis compute cluster in just three months.

Longer-term risks for Nvidia include the move from training to inference chips

Ultimately, however, only a handful of companies have enough capital to be able to compete in the AI race because training large language models can be prohibitively expensive. Typically, these are cloud hyperscalers such as Microsoft.

Oracle, for example, recently announced plans to build a zettascale data center with over 131,000 of Nvidia’s state-of-the-art Blackwell AI training chips, which would be more powerful than any single site available today.

It is estimated that the amount of electricity needed to operate such a giant computer cluster would be equivalent to the output power of nearly two dozen nuclear power plants.

Bloomberg Intelligence analyst Singh sees only a few longer-term risks for Nvidia. First, some hyperscalers will likely reduce orders eventually, diluting its market share. One likely candidate is Alphabet, which has its own training chip, called the TPU.

Second, its dominance in training is not matched in inference, which runs generative AI models after they have been trained. Here the technical requirements are not nearly as cutting-edge, which means there is more competition, not only from rivals such as AMD but also from companies with their own custom silicon, like Tesla. Eventually, inference will be a much more meaningful business as more and more companies use AI.

“There are a lot of companies trying to focus on that inference opportunity, because you don’t need the highest-end GPU accelerator chip for that,” Singh said.

When asked whether moving to inference in the long term was a bigger risk than losing market share in training chips, he replied: “Absolutely.”